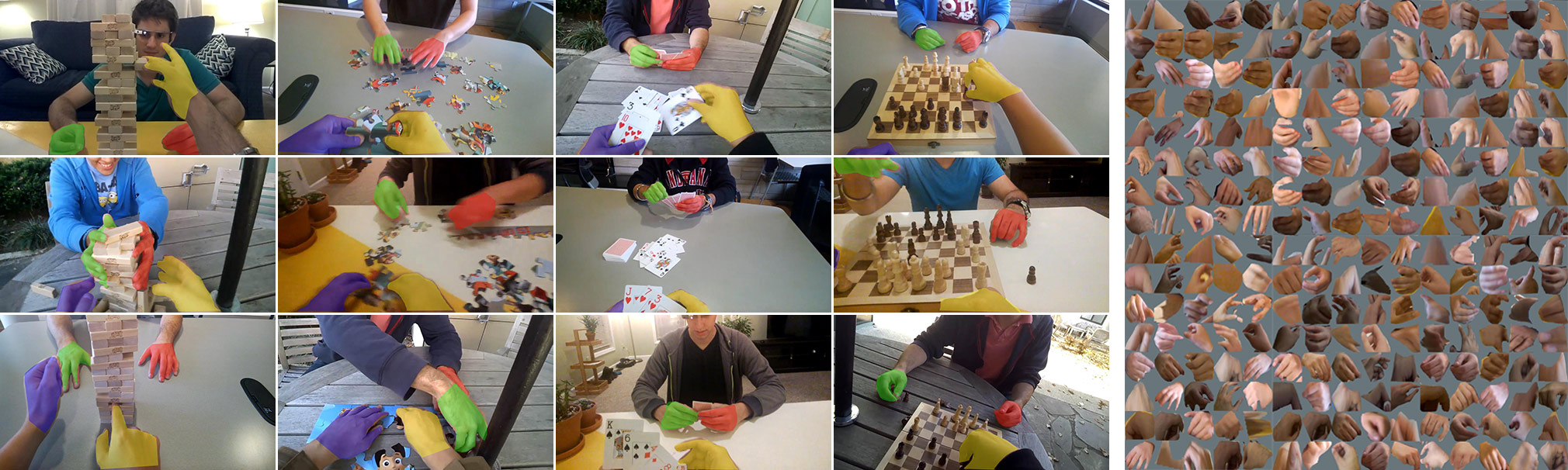

EgoHands contains 48 different videos of egocentric interactions with pixel-level ground-truth annotations for 4,800 frames and more than 15,000 hands!

Intro

The EgoHands dataset contains 48 Google Glass videos of complex, first-person interactions between two people. The main intention of this dataset is to enable better, data-driven approaches to understanding hands in first-person computer vision. The dataset offers:

- high-quality, pixel-level segmentations of hands

- the possibility to semantically distinguish between the observer’s hands and someone else’s hands, as well as left and right hands

- virtually unconstrained hand poses as actors freely engage in a set of joint activities

- lots of data with 15,053 ground-truth labeled hands

More detailed information about the structure of the dataset can be found in the README.txt file provided with the “Labeled Data” download below. If you have any questions, please contact Sven at sbambach[at]indiana[dot]edu. You are welcome to use this data in your work as long as you cite our corresponding ICCV paper:

    @InProceedings{Bambach_2015_ICCV,
      author    = {Bambach, Sven and Lee, Stefan and Crandall, David J. and Yu, Chen},
      title     = {Lending A Hand: Detecting Hands and Recognizing Activities in Complex Egocentric Interactions},
      booktitle = {The IEEE International Conference on Computer Vision (ICCV)},
      month     = {December},
      year      = {2015}
    }

Downloads

| Name | Description | File Type (Size) | Link |
| --- | --- | --- | --- |
| Labeled Data | This archive contains all labeled frames as JPEG files (720x1280px). There are 100 labeled frames for each of the 48 videos, for a total of 4,800 frames. The ground-truth labels consist of pixel-level masks for each hand type and are provided as Matlab files, for which we provide a simple API. More information is included in a README.txt file. This download should be all you need for most applications! | Zip Archive (1.3 GB) | Download |
| Video Files | All 48 videos as MP4 (H.264) video files. Each video is 90 seconds long and has a resolution of 720x1280px at 30fps. | Zip Archive (2.2 GB) | Download |
| All Frames | All 48 videos with every single frame extracted as a JPEG file (720x1280px). Frames were extracted at 30fps such that each video (90 seconds) contains exactly 2,700 frames. This is a very large file! You probably only want to download this if you are interested in applying a frame-based tracking method. You could also extract frames yourself from the video files above, but the frames here are guaranteed to match our ground-truth data, i.e. frame_1234.jpg from these files will match frame_1234.jpg as provided with the ground-truth data above. | Zip Archive (8.2 GB) | Download |
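The frame counts quoted in the table follow directly from the video length and frame rate, and the shared file names make it easy to check that a labeled frame has a counterpart in the “All Frames” download. The following Python sketch illustrates both. The zero-padded naming pattern and the 1-based frame index are assumptions modeled on the `frame_1234.jpg` example, and `frame_name` and `missing_labeled_frames` are hypothetical helpers, not part of the provided API; consult README.txt for the actual layout.

```python
# Dataset constants taken from the download descriptions above.
NUM_VIDEOS = 48
VIDEO_SECONDS = 90
FPS = 30
LABELED_PER_VIDEO = 100

frames_per_video = VIDEO_SECONDS * FPS            # 90 s * 30 fps = 2,700
total_frames = NUM_VIDEOS * frames_per_video      # 48 * 2,700 = 129,600
total_labeled = NUM_VIDEOS * LABELED_PER_VIDEO    # 48 * 100 = 4,800


def frame_name(index, digits=4):
    """Build a frame file name.

    ASSUMPTION: zero-padded, 1-based indices, modeled on 'frame_1234.jpg'.
    """
    if not 1 <= index <= frames_per_video:
        raise ValueError(f"frame index {index} is outside 1..{frames_per_video}")
    return f"frame_{index:0{digits}d}.jpg"


def missing_labeled_frames(labeled_names, all_frame_names):
    """Return labeled frame names that have no match among the extracted frames."""
    return sorted(set(labeled_names) - set(all_frame_names))
```

If the names match as described, `missing_labeled_frames` returns an empty list for every video.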

Caffe Models

New! We provide our trained Caffe models for egocentric hand classification/detection online. Both models take input via Caffe’s window data layer. The first network classifies windows as hand or background, while the second network classifies windows as background or one of four semantic hand types (own left/right hand and other left/right hand). Both networks were trained with the “main split” training data.

- Hand classification/detection network: Prototxt File | Caffemodel File

- Hand-type classification/detection network: Prototxt File | Caffemodel File
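As a rough illustration of how the two networks’ outputs relate, the Python sketch below interprets a five-way score vector from the hand-type network and collapses it into a single hand-vs-background score. The class order in `HAND_TYPE_CLASSES` is an assumption made for this example, not read from the prototxt files, and the scores are assumed to be softmax probabilities; check the model definitions before relying on either.

```python
# ASSUMPTION: class order is illustrative only; verify against the prototxt files.
HAND_TYPE_CLASSES = (
    "background",
    "own_left",
    "own_right",
    "other_left",
    "other_right",
)


def predict_hand_type(scores):
    """Return the label with the highest score for one window."""
    if len(scores) != len(HAND_TYPE_CLASSES):
        raise ValueError("expected one score per class")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return HAND_TYPE_CLASSES[best]


def hand_probability(scores):
    """Collapse five-way probabilities into a single 'any hand' probability.

    ASSUMPTION: scores are softmax probabilities and index 0 is background.
    """
    if len(scores) != len(HAND_TYPE_CLASSES):
        raise ValueError("expected one score per class")
    return sum(scores[1:])
```

Summing the four hand-type probabilities gives a score comparable in spirit to the first network’s binary output, although the two networks are trained separately and need not agree.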

Window Proposal Code

New! We now provide MATLAB code for the window proposal method as discussed in Section 4.1 of the paper. If you also download the dataset (Labeled Data) above, the provided code will learn sampling and skin-color parameters based on the training videos, as well as demonstrate how to apply the proposal method to unseen frames from the test videos. Simply download the file below and extract it into the same directory as the dataset.

- Download: window_proposals.tar.gz
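For readers who prefer to script the setup, this minimal Python sketch extracts a `.tar.gz` archive into the dataset directory using only the standard library. The function name `extract_into` and the paths in the usage comment are hypothetical; substitute the locations you actually downloaded to.

```python
import tarfile
from pathlib import Path


def extract_into(archive, dataset_dir):
    """Extract a .tar.gz archive into dataset_dir and return the member names."""
    dataset_dir = Path(dataset_dir)
    dataset_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        names = tar.getnames()
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions support extraction filters that reject unsafe paths.
            tar.extractall(dataset_dir, filter="data")
        else:
            tar.extractall(dataset_dir)
    return names


# Example usage (hypothetical paths):
# extract_into("window_proposals.tar.gz", "path/to/labeled_data")
```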

Example Videos

Embedded below is a playlist with four example videos from the dataset, one for each activity (puzzle, cards, Jenga, chess). The videos also show hand(-type) segmentation results from our ICCV paper, obtained by processing each frame independently.