Tian Song, Stefan Lee, Tingyi Wanyan, Bardia Doosti, and David Crandall

Computer vision has progressed remarkably over the last few years, but fine-grained recognition tasks such as identifying specific species of trees or types of geological formations are still very difficult, owing, for example, to the subtle visual cues that must be used and to limited training datasets. These tasks are also very difficult for untrained humans without domain expertise. Computers and humans have complementary strengths, however. Computers are very good at scanning through huge image libraries to find potential matches, but these potential matches are usually noisy. In contrast, humans are very slow at large-scale image matching, but even untrained observers can often verify whether two objects really belong to the same fine-grained class.

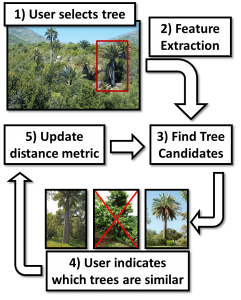

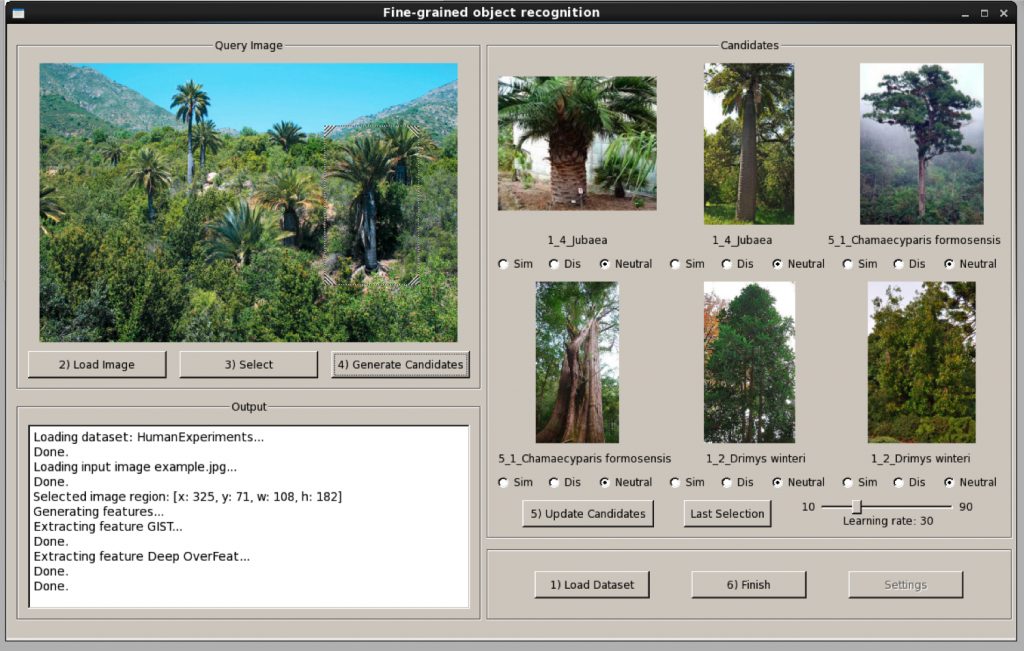

Our work combines these advantages into a tool that allows humans and computers to collaborate on fine-grained recognition tasks, reaching an accuracy that neither can achieve independently. We have created and publicly released a software tool for performing this human-in-the-loop recognition. The system requires a library of labeled images for each category of interest. During an offline training phase, the system uses this labeled training data to learn initial classifiers for each class. During the online, human-in-the-loop phase, the system presents potential matches and asks the user to verify them, indicating whether each candidate match is good, poor, or neutral. Using this feedback, the system updates its internal classification models and presents a new set of candidates. The human and computer thus collaborate, back and forth, until they converge on an answer. We have successfully tested the tool on several recognition problems, including fine-grained tree species, bird species, and rock types.

Left: Human-in-the-loop recognition workflow. Right: Screenshot of the human-in-the-loop tool.
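The back-and-forth between user and system described above can be illustrated with a small sketch. This is not the code of the released tool: the nearest-centroid scoring, the additive per-class bias, and all names (`train_centroids`, `rank_classes`, `recognize`, `ask_user`) are assumptions chosen only to show the shape of the loop, with plain feature vectors standing in for images.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical numeric encoding of the three verdicts a user can give.
FEEDBACK_VALUE = {"good": 1.0, "neutral": 0.0, "poor": -1.0}


def train_centroids(library: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Offline phase (assumed model): one mean feature vector per class."""
    centroids = {}
    for label, vectors in library.items():
        dim = len(vectors[0])
        centroids[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return centroids


def rank_classes(query: Sequence[float],
                 centroids: Dict[str, List[float]],
                 bias: Dict[str, float]) -> List[str]:
    """Order classes by feedback bias minus squared distance to the query."""
    def score(label: str) -> float:
        dist = sum((q - c) ** 2 for q, c in zip(query, centroids[label]))
        return bias[label] - dist
    return sorted(centroids, key=score, reverse=True)


def recognize(query: Sequence[float],
              library: Dict[str, List[Sequence[float]]],
              ask_user: Callable[[str], str],
              top_k: int = 2,
              step: float = 1.0,
              max_rounds: int = 10) -> str:
    """Online phase: show candidates, collect verdicts, update, repeat."""
    centroids = train_centroids(library)
    bias = {label: 0.0 for label in centroids}
    for _ in range(max_rounds):
        candidates = rank_classes(query, centroids, bias)[:top_k]
        feedback = {label: ask_user(label) for label in candidates}
        # Fold the user's verdicts into the model before the next round.
        for label, verdict in feedback.items():
            bias[label] += step * FEEDBACK_VALUE[verdict]
        # Stop once the user confirms the top-ranked candidate.
        if feedback[candidates[0]] == "good":
            return candidates[0]
    return rank_classes(query, centroids, bias)[0]
```

In this sketch, `ask_user` is any callable that maps a candidate class label to `"good"`, `"poor"`, or `"neutral"`; in an interactive setting it would show the candidate images and read the user's response. A real system would likely update richer classifiers than a single bias term per class.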

Downloads

- Source code of the tool is available via GitHub. It requires Linux and specific versions of several libraries. See the ReadMe file for details.

Acknowledgements

This research was supported by the Intelligence Advanced Research Projects Activity (IARPA) via Air Force Research Laboratory, contract FA8650-12-C-7212. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon. Disclaimer: The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of IARPA, AFRL, or the U.S. Government.