Chenyou Fan, Jangwon Lee, Mingze Xu, Krishna Kumar Singh, Yong Jae Lee, David J. Crandall and Michael S. Ryoo

Abstract

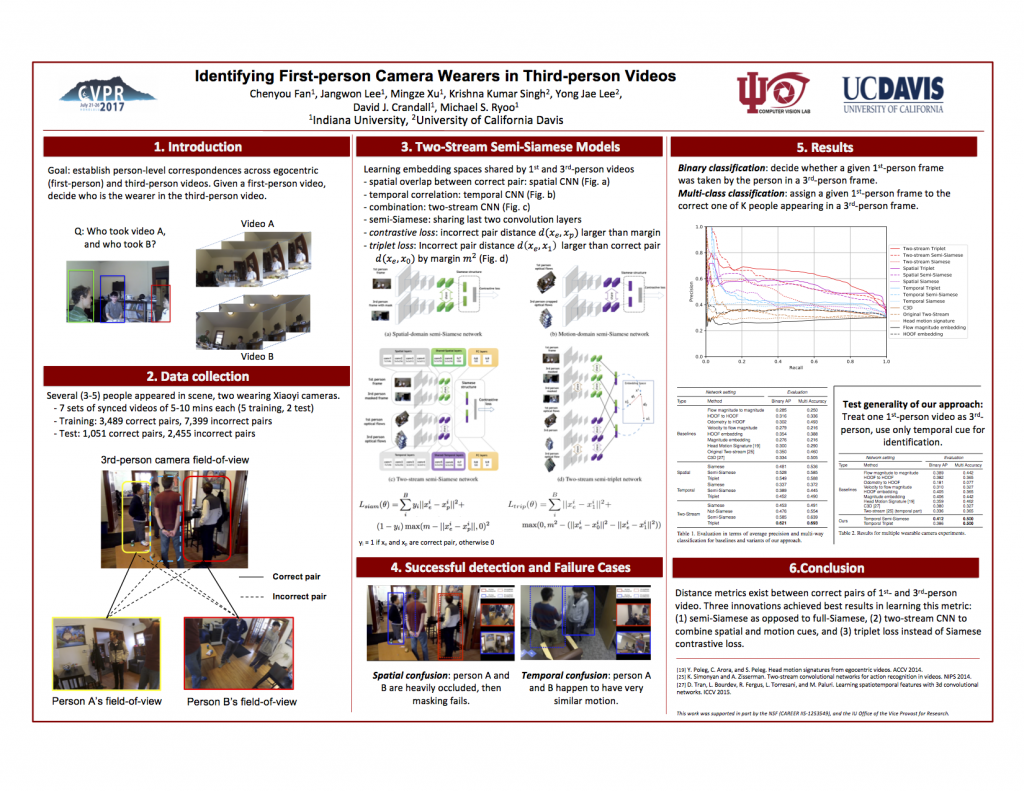

We consider scenarios in which we wish to perform joint scene understanding, object tracking, activity recognition, and other tasks in scenarios in which multiple people are wearing body-worn cameras while a third-person static camera also captures the scene. To do this, we need to establish person-level correspondences across first- and third-person videos, which is challenging because the camera wearer is not visible from his/her own egocentric video, preventing the use of direct feature matching. In this paper, we propose a new semi-Siamese Convolutional Neural Network architecture to address this novel challenge. We formulate the problem as learning a joint embedding space for first- and third-person videos that considers both spatial- and motion-domain cues. A new triplet loss function is designed to minimize the distance between correct first- and third-person matches while maximizing the distance between incorrect ones. This end-to-end approach performs significantly better than several baselines, in part by learning the first- and third-person features optimized for matching jointly with the distance measure itself.

Paper, Poster and Example Video

Citation

@inproceedings{firstthird2017cvpr,

title = {Identifying first-person camera wearers in third-person videos},

author = {Chenyou Fan and Jangwon Lee and Mingze Xu and Krishna Kumar Singh and Yong Jae Lee and David J. Crandall and Michael S. Ryoo},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2017}

}

Downloads

- Caffe model files. This repository is caffe implementation.

- 1st-3rd dataset. This dataset contains the images and optical flows used for 1st-3rd person matching. Seven videos are split to training and testing sets. You can file split here. The meta data of videos is here, which indicates videoNo, how many persons, third-person camera, camera wearer1, camera wearer2, and 1 or 2 others. Video frames and optical flows can be downloaded here or in separate files 2 3 4 5 6 7 8 . Not all frames have human bounding boxes. Please refer to split files to see which frames have bounding boxes. Note that we use whole 1st-person frames, and masked or cropped third-person frames on bounding box positions. All those masked/cropped frames should be found in our dataset provided here. Please refer to our paper for details. Please cite our paper if our dataset or model files do help in your work.

Acknowledgements

|

|

|

|||

| National Science Foundation |

Nvidia | IU Pervasive Technology Institute | IU Vice Provost for Research |