Chenyou Fan and David Crandall

Lifelogging cameras capture everyday life from a first-person perspective, but generate so much data that it is hard for users to browse and organize their image collections effectively. In this paper, we propose to use automatic image captioning algorithms to generate textual representations of these collections. We develop and explore novel techniques based on deep learning to generate captions for both individual images and image streams, using temporal consistency constraints to create summaries that are both more compact and less noisy.
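To make the idea of a compact, less noisy stream summary concrete, here is a minimal sketch of a *post-hoc* heuristic. It assumes each image already has a caption and that the images are in temporal order. It merges adjacent images whose captions overlap strongly (word-level Jaccard similarity at or above a hypothetical `threshold`), then keeps one representative caption per segment. This is a swapped-in illustration, not the temporal consistency constraint used in the paper. The names `jaccard`, `medoid` and `summarize_stream` are hypothetical.

```python
from typing import List, Tuple


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity between two captions."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def medoid(captions: List[str]) -> str:
    """Pick the caption most similar to the rest of its segment (first wins ties)."""
    return max(captions, key=lambda c: sum(jaccard(c, o) for o in captions))


def summarize_stream(captions: List[str], threshold: float = 0.5) -> List[Tuple[int, int, str]]:
    """Merge temporally adjacent images whose captions are similar.

    Returns one (first_index, last_index, representative_caption) per segment.
    """
    segments: List[Tuple[int, int, str]] = []
    start = 0
    for i in range(1, len(captions) + 1):
        # Close the segment at the end of the stream, or when the next caption
        # drifts too far from the caption that opened the segment.
        if i == len(captions) or jaccard(captions[start], captions[i]) < threshold:
            segments.append((start, i - 1, medoid(captions[start:i])))
            start = i
    return segments


# Hypothetical per-image captions for five consecutive lifelogging frames.
stream = [
    "a man sitting at a desk with a laptop",
    "a man sitting at a desk with a computer",
    "a man sitting at a desk with a laptop",
    "a plate of food on a table",
    "a plate of food on a wooden table",
]
segments = summarize_stream(stream)
```

On this toy stream the five frames collapse into two segments, frames 0 to 2 and frames 3 to 4. Picking the medoid caption, rather than simply the first one, is meant to suppress one-off captioning errors inside a segment.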

We evaluate our techniques with quantitative and qualitative results, and apply captioning to an image retrieval application for finding potentially private images. Our results suggest that our automatic captioning algorithms, while imperfect, may work well enough to help users manage lifelogging photo collections. An expanded version of this paper is available here.
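Once images have captions, retrieving potentially private ones can be approached as a text search problem. The sketch below ranks images by how many words from a privacy-related query list appear in their captions. It assumes simple keyword matching. The term list `PRIVATE_TERMS`, the function `rank_private` and the image ids are all hypothetical, and the retrieval method used in the paper may differ.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical privacy-related query terms (not taken from the paper).
PRIVATE_TERMS: Set[str] = {"bathroom", "toilet", "laptop", "computer", "screen", "phone", "bed"}


def rank_private(captions: Dict[str, str], terms: Set[str] = PRIVATE_TERMS) -> List[Tuple[str, int]]:
    """Rank image ids by how many privacy-related terms their caption contains."""
    scored: List[Tuple[str, int]] = []
    for image_id, caption in captions.items():
        words = set(caption.lower().split())
        score = len(words & terms)
        if score > 0:  # skip images with no matching terms
            scored.append((image_id, score))
    # Highest score first; ties broken by image id for a stable order.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored


# Hypothetical generated captions keyed by image file name.
captions = {
    "img_001.jpg": "a man using a laptop computer on a desk",
    "img_002.jpg": "a street with cars and trees",
    "img_003.jpg": "a bathroom with a toilet and a sink",
    "img_004.jpg": "a person holding a phone",
}
ranked = rank_private(captions)
```

Here the street scene is dropped, and the laptop and bathroom images rank above the phone image. A real system would likely want stemming, synonyms, or a learned text model instead of exact word matches.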

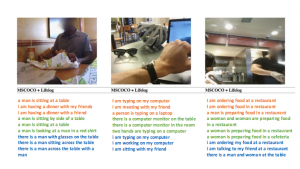

Figure 1: Sample captions generated by our captioning technique with diversity regulation.
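One common way to encourage diverse captions is to penalize redundancy when several candidates are picked from a ranked list, for example the outputs of beam search. The sketch below uses a greedy, maximal-marginal-relevance-style rule: each candidate's model score is reduced by `penalty` times its highest word-level Jaccard similarity to any caption already chosen. This is a swapped-in illustration, not necessarily how the diversity regulation in Figure 1 works. The names `select_diverse` and `penalty` and the candidate scores are hypothetical.

```python
from typing import List, Tuple


def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity between two captions."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def select_diverse(candidates: List[Tuple[str, float]], k: int, penalty: float = 1.0) -> List[str]:
    """Greedily pick k captions, trading model score against redundancy.

    Each step scores a candidate as
        score - penalty * (max Jaccard similarity to any caption already chosen),
    so a near-duplicate of an earlier pick is pushed down the list.
    """
    remaining = list(candidates)
    chosen: List[str] = []
    while remaining and len(chosen) < k:
        best = max(
            remaining,
            key=lambda item: item[1]
            - penalty * max((jaccard(item[0], c) for c in chosen), default=0.0),
        )
        chosen.append(best[0])
        remaining.remove(best)
    return chosen


# Hypothetical beam-search candidates with log-probability scores.
beam = [
    ("a man sitting at a desk with a laptop", -1.0),
    ("a man sitting at a desk with a computer", -1.1),
    ("a person working on a laptop in an office", -1.5),
]
diverse = select_diverse(beam, k=2)
```

With `penalty=0.0` the two near-identical top captions would both be selected. With the default penalty, the second pick switches to the lower-scoring but more distinct "a person working on a laptop in an office".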

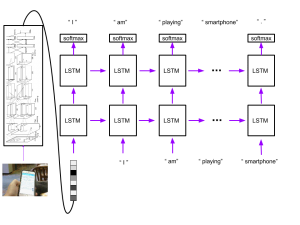

Figure 2: LSTM model for generating captions.
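As a rough illustration of the recurrent unit in Figure 2, the sketch below implements one step of a standard LSTM cell in plain Python. In a typical image captioner of this kind, the first input is an image feature vector, later inputs are embeddings of previously generated words, and a softmax over the vocabulary is applied to the hidden state `h` to pick the next word. Whether the model in Figure 2 follows exactly this layout is an assumption. The function name `lstm_step` and the gate ordering inside `W` are also hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def matvec(W: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector, W: Matrix, b: Vector) -> Tuple[Vector, Vector]:
    """One step of a standard LSTM cell.

    W has 4*H rows and len(x) + H columns, stacked as
    [input gate; forget gate; output gate; candidate] (hypothetical ordering).
    """
    H = len(h_prev)
    z = [zi + bi for zi, bi in zip(matvec(W, x + h_prev), b)]
    i = [sigmoid(v) for v in z[0:H]]          # input gate
    f = [sigmoid(v) for v in z[H:2 * H]]      # forget gate
    o = [sigmoid(v) for v in z[2 * H:3 * H]]  # output gate
    g = [math.tanh(v) for v in z[3 * H:4 * H]]  # candidate cell values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

With all-zero weights and biases, every gate evaluates to 0.5 and the candidate to 0. The cell state is therefore halved and `h = 0.5 * tanh(c)`, which is a quick sanity check on the gate wiring.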


Acknowledgements

National Science Foundation, Nvidia, Lilly Endowment, IU Pervasive Technology Institute, IU Vice Provost for Research