Chenyou Fan and David Crandall

Lifelogging cameras capture everyday life from a first-person perspective, but generate so much data that it is hard for users to browse and organize their image collections effectively. In this paper, we propose to use automatic image captioning algorithms to generate textual representations of these collections. We develop and explore novel techniques based on deep learning to generate captions for both individual images and image streams, using temporal consistency constraints to create summaries that are both more compact and less noisy.
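The paper summarizes image streams using temporal consistency constraints; its exact formulation is not given on this page. Purely to illustrate the general idea, the sketch below uses a much simpler heuristic, which is our assumption and not the authors' method. It merges consecutive frames whose generated captions share enough words (Jaccard similarity of word sets, with a hypothetical threshold of 0.5) and keeps one representative caption per merged segment. The function names `jaccard` and `summarize_stream` are illustrative only.

```python
from __future__ import annotations


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the word sets of two captions."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def summarize_stream(captions: list[str], threshold: float = 0.5) -> list[tuple[int, int, str]]:
    """Merge runs of consecutive frames with similar captions.

    Returns (first_frame, last_frame, representative_caption) tuples.
    The 0.5 threshold is an illustrative assumption, not a tuned value.
    """
    segments = []
    start = 0
    for i in range(1, len(captions) + 1):
        # Close the current segment at the end of the stream, or when frame i's
        # caption overlaps too little with the segment's first caption.
        if i == len(captions) or jaccard(captions[start], captions[i]) < threshold:
            group = captions[start:i]
            # Keep the caption with the highest total similarity to its group
            # (ties go to the earliest frame).
            rep = max(group, key=lambda cap: sum(jaccard(cap, other) for other in group))
            segments.append((start, i - 1, rep))
            start = i
    return segments


if __name__ == "__main__":
    frames = [
        "a man sitting at a desk with a laptop",
        "a man sitting at a desk with a computer",
        "a plate of food on a table",
        "a plate of food on a wooden table",
    ]
    for first, last, caption in summarize_stream(frames):
        print(f"frames {first}-{last}: {caption}")
    # frames 0-1: a man sitting at a desk with a laptop
    # frames 2-3: a plate of food on a table
```

Four captions collapse to two, which shows how enforcing consistency across neighboring frames can make a summary both shorter and less noisy; a real system would also need to handle frames where the captioner itself is wrong.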

We evaluate our techniques with quantitative and qualitative results, and apply captioning to an image retrieval application for finding potentially private images. Our results suggest that our automatic captioning algorithms, while imperfect, may work well enough to help users manage lifelogging photo collections. An expanded version of this paper is available here.
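As a hypothetical illustration of how generated captions can support retrieval of potentially private images, the sketch below ranks images by how many words from a hand-picked list of sensitive terms appear in their captions. The term list, the `rank_private` function, and plain keyword matching are all assumptions made for illustration; the retrieval setup used in the paper may differ.

```python
from __future__ import annotations

# Hypothetical list of words that might indicate a privacy-sensitive scene.
SENSITIVE_TERMS = frozenset(
    {"bathroom", "toilet", "bed", "bedroom", "screen", "laptop", "computer", "phone"}
)


def rank_private(captions: dict[str, str], terms=SENSITIVE_TERMS) -> list[tuple[str, int]]:
    """Rank image IDs by how many sensitive terms their generated caption contains.

    Images whose captions contain none of the terms are left out.
    """
    scored = []
    for image_id, caption in captions.items():
        hits = len(set(caption.lower().split()) & set(terms))
        if hits:
            scored.append((image_id, hits))
    # Most matches first; ties broken by image ID so the order is deterministic.
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored


if __name__ == "__main__":
    generated = {
        "img_001": "a man sitting at a desk with a laptop",
        "img_002": "a plate of food on a table",
        "img_003": "a laptop and a phone on a bed",
    }
    print(rank_private(generated))
    # [('img_003', 3), ('img_001', 1)]
```

Searching text is much cheaper than re-running a vision model over the whole collection, which is one reason a textual representation is attractive here; the trade-off is that any captioning error carries over into the retrieval results.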

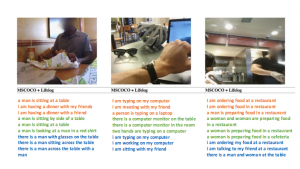

Figure 1: Sample captions generated by our captioning technique with diversity regularization.
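Figure 1 shows captions from a technique that encourages diversity. This page does not describe how that is done, so the sketch below substitutes a generic, well-known alternative: an MMR-style greedy re-ranking of candidate captions (for example, beam-search outputs). Each candidate's model score is reduced by a penalty proportional to its word overlap with captions already selected. The function `pick_diverse`, the `penalty` weight, and the example scores are hypothetical.

```python
from __future__ import annotations


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the word sets of two captions."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def pick_diverse(candidates: list[tuple[str, float]], k: int, penalty: float = 1.0) -> list[str]:
    """Greedily choose up to k captions from (caption, score) pairs.

    Each candidate's score is reduced by `penalty` times its highest word
    overlap with any caption already chosen (an MMR-style re-ranking).
    """
    chosen: list[str] = []
    remaining = list(candidates)
    while remaining and len(chosen) < k:
        best = max(
            remaining,
            key=lambda item: item[1]
            - penalty * max((jaccard(item[0], c) for c in chosen), default=0.0),
        )
        chosen.append(best[0])
        remaining.remove(best)
    return chosen


if __name__ == "__main__":
    # Hypothetical (caption, log-probability) pairs from a captioning model.
    beam = [
        ("a man sitting at a desk with a laptop", -1.0),
        ("a man sitting at a desk with a computer", -1.1),
        ("a laptop on a wooden desk", -1.4),
    ]
    print(pick_diverse(beam, k=2))
    # ['a man sitting at a desk with a laptop', 'a laptop on a wooden desk']
    print(pick_diverse(beam, k=2, penalty=0.0))
    # ['a man sitting at a desk with a laptop', 'a man sitting at a desk with a computer']
```

With `penalty=0.0` the selection reduces to plain top-k by score and returns two near-duplicates. Raising the penalty trades a little likelihood for captions that describe the scene in different words.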

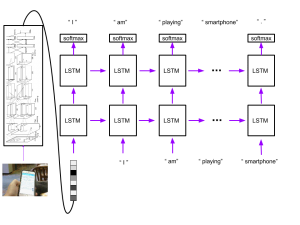

Figure 2: LSTM model for generating captions.
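Figure 2 shows the LSTM captioning model, but its exact configuration is not specified here (the released code is a Caffe implementation). The following pure-Python sketch therefore assumes a standard LSTM cell, with gate blocks ordered input, forget, output, candidate, and a greedy decoder in the common "show and tell" style. The image feature, assumed to be already projected to the word-embedding size, is fed at the first step, and the embedding of each predicted word is fed back at later steps. All names (`lstm_step`, `greedy_decode`, `W_out`, `end_id`) are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lstm_step(x, h, c, W, U, b):
    """One step of a standard LSTM cell.

    W is 4H x D, U is 4H x H, b has length 4H; gate blocks are assumed to be
    ordered (input, forget, output, candidate).
    """
    H = len(h)
    z = [wx + uh + bias for wx, uh, bias in zip(matvec(W, x), matvec(U, h), b)]
    i = [sigmoid(v) for v in z[0:H]]
    f = [sigmoid(v) for v in z[H:2 * H]]
    o = [sigmoid(v) for v in z[2 * H:3 * H]]
    g = [math.tanh(v) for v in z[3 * H:4 * H]]
    # New cell state keeps part of the old memory and adds gated new content.
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def greedy_decode(image_feat, embed, W, U, b, W_out, end_id, max_len=16):
    """Greedy caption decoding; returns the list of predicted word IDs.

    The image feature (assumed already projected to the embedding size D)
    is fed at the first step; afterwards the embedding of each predicted
    word is fed back in.
    """
    H = len(W) // 4
    h, c = [0.0] * H, [0.0] * H
    x = image_feat
    words = []
    for _ in range(max_len):
        h, c = lstm_step(x, h, c, W, U, b)
        # One unnormalized score per vocabulary word; softmax is monotonic,
        # so the argmax of the scores is also the most probable word.
        scores = matvec(W_out, h)
        word = max(range(len(scores)), key=scores.__getitem__)
        if word == end_id:
            break
        words.append(word)
        x = embed[word]
    return words
```

A trained model would supply learned weights and a vocabulary that maps word IDs back to strings. Beam search is a common alternative to the greedy choice made at each step here.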

Papers and presentations

BibTeX entries:

@article{deepdiary2018jvci,
  journal = {Journal of Visual Communication and Image Representation},
  title = {{DeepDiary}: Lifelogging image captioning and summarization},
  author = {Chenyou Fan and Zehua Zhang and David Crandall},
  year = {2018},
  volume = {55},
  month = {August},
  pages = {40--55}
}

@inproceedings{deepdiary2016eccvw,
  title = {DeepDiary: Automatically Captioning Lifelogging Image Streams},
  author = {Chenyou Fan and David Crandall},
  booktitle = {European Conference on Computer Vision International Workshop on Egocentric Perception, Interaction, and Computing (EPIC)},
  year = {2016}
}

@techreport{deepdiary2016arxiv,
  title = {{DeepDiary:} Automatic caption generation for lifelogging image streams},
  author = {Chenyou Fan and David Crandall},
  year = {2016},
  institution = {arXiv 1606.07839}
}

Downloads

- Poster.

- GitHub code repository. This repository is a Caffe implementation of image captioning for lifelogging data. Please see our paper and the repository README for details on how to use this package to generate interesting and diverse sentences for your own photos.

- Lifelogging dataset. This dataset contains the VGG image features and the human labels we collected during this project. Our GitHub site explains in detail how to use the data files to train a model on the human labels.

- AMT dataset. A subset of our dataset, containing the photos we published on Amazon Mechanical Turk for public labeling.

Acknowledgements

- National Science Foundation
- Nvidia
- Lilly Endowment
- IU Pervasive Technology Institute
- IU Vice Provost for Research