Haipeng Zhang, Mohammed Korayem, Erkang You, David Crandall

Studying relationships between keyword tags on social sharing websites has become a popular research topic, both to improve tag suggestion systems and to automatically find connections between the concepts that tags represent. Existing approaches to discovering tag relationships rely mainly on tag co-occurrences, ignoring the other sources of information available on social sharing websites. In this paper, we show how to find similar tags by comparing their distributions over time and space, discovering tags with similar geographic and temporal patterns of use. In particular, we apply this technique to find related keyword tags in a dataset of tens of millions of geo-tagged, time-stamped photos downloaded from Flickr. Geo-spatial, temporal and geo-temporal distributions of tags are extracted and represented as vectors, which can then be compared and clustered. We show that we can successfully cluster Flickr photo tags based on their geographic and temporal patterns, and we evaluate the results both qualitatively and quantitatively using a panel of human judges. A case study suggests that our visualizations of temporal and geographic semantics help humans recognize subtle semantic relationships between tags. This approach to finding and visualizing similar tags is potentially useful for exploring and aggregating any data that carries geographic and temporal annotations.
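To make the idea of "distributions as vectors" concrete, here is a minimal sketch of how a tag's photos might be turned into a geographic histogram (over latitude/longitude grid cells) and a temporal histogram (over calendar months), and how two such vectors could be compared. The one-degree grid, the monthly bins, and the use of cosine similarity are all assumptions made for illustration; the paper's actual discretization and similarity measure may differ. The helper names `geo_vector`, `temporal_vector`, and `cosine` are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical bin size: the paper's actual grid resolution is not given here.
GRID_DEG = 1.0  # geographic cell size in degrees (assumption)


def geo_vector(photos, grid_deg=GRID_DEG):
    """Normalized histogram of a tag's photos over lat/lon grid cells.

    photos: iterable of (lat, lon) pairs for photos carrying the tag.
    Returns a sparse dict mapping (row, col) cell -> fraction of the tag's photos.
    """
    counts = defaultdict(int)
    for lat, lon in photos:
        cell = (math.floor(lat / grid_deg), math.floor(lon / grid_deg))
        counts[cell] += 1
    total = sum(counts.values())
    return {cell: c / total for cell, c in counts.items()}


def temporal_vector(months):
    """Normalized histogram over calendar months 1..12 (monthly bins are an assumption).

    Returns a sparse dict mapping month index 0..11 -> fraction of the tag's photos.
    """
    counts = [0] * 12
    for m in months:
        counts[m - 1] += 1
    total = sum(counts)
    return {i: c / total for i, c in enumerate(counts) if c}


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


if __name__ == "__main__":
    # Toy coordinates, not data from the paper.
    beach = geo_vector([(25.8, -80.2), (25.7, -80.1), (34.0, -118.5)])
    surf = geo_vector([(25.9, -80.3), (34.1, -118.4)])
    print(round(cosine(beach, surf), 4))  # 0.9487
```

Because every vector is normalized, a tag's total popularity does not dominate the comparison; only the shape of its distribution matters. Vectors built this way could then be passed to any standard clustering algorithm.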

Results

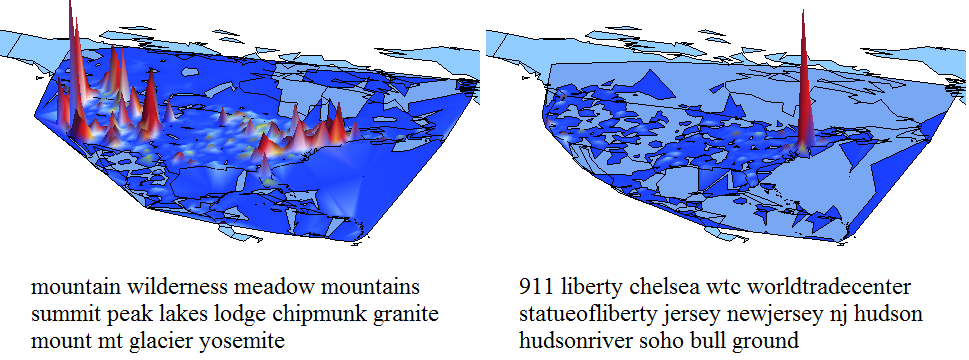

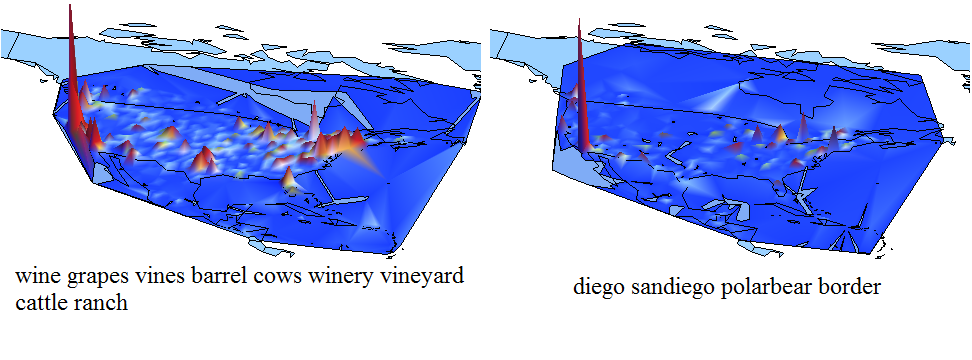

- Geo clusters, ranked by average second moment, with visualizations

Some examples:

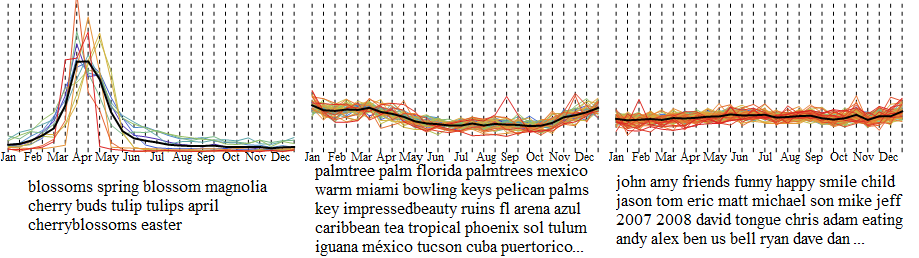

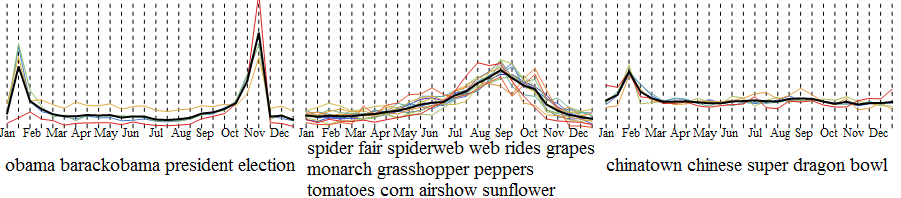
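The cluster lists are ordered by "average second moment". The page does not define the term, so the sketch below assumes it means the sum of squared bin probabilities of each tag's normalized distribution, a common peakedness measure that equals 1.0 when all of a tag's photos fall in one bin and 1/n when they are spread evenly over n bins. Under that assumption, clusters of tags tied to specific places or times would rank above clusters of tags used everywhere. The functions `second_moment` and `rank_clusters` are hypothetical names.

```python
def second_moment(dist):
    """Sum of squared bin probabilities (assumed definition of 'second moment').

    dist: dict mapping bin -> probability, summing to 1.
    """
    return sum(p * p for p in dist.values())


def rank_clusters(clusters, dists):
    """Order clusters (lists of tags) by the mean second moment of their tags'
    distributions, highest (most concentrated) first."""
    def avg_moment(cluster):
        return sum(second_moment(dists[tag]) for tag in cluster) / len(cluster)
    return sorted(clusters, key=avg_moment, reverse=True)


if __name__ == "__main__":
    # Toy distributions over four bins, not data from the paper.
    dists = {
        "eiffel": {0: 1.0},
        "paris": {0: 0.8, 1: 0.2},
        "sunset": {0: 0.25, 1: 0.25, 2: 0.25, 3: 0.25},
        "sky": {0: 0.5, 1: 0.5},
    }
    print(rank_clusters([["sunset", "sky"], ["eiffel", "paris"]], dists))
    # [['eiffel', 'paris'], ['sunset', 'sky']]
```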

- Temporal clusters, ranked by average second moment, with visualizations

Some examples:

- Motion clusters, ranked by average second moment

- Co-occurrence clusters

- Mutual-Information clusters
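The co-occurrence and mutual-information clusters presumably correspond to the co-occurrence-based approaches the abstract contrasts with, where tag relatedness comes only from which tags appear together on the same photo. As a minimal sketch, assuming pointwise mutual information (PMI) as the mutual-information variant, both quantities can be computed from per-photo tag sets. The function name `cooccurrence_and_pmi` is hypothetical, and the paper's exact formulation may differ.

```python
import math
from collections import Counter
from itertools import combinations


def cooccurrence_and_pmi(photo_tags):
    """Pairwise tag co-occurrence counts and pointwise mutual information.

    photo_tags: list of tag collections, one per photo.
    Returns (pair_counts, pmi), keyed by alphabetically ordered tag pairs, where
    pmi[(a, b)] = log(P(a, b) / (P(a) * P(b))) with probabilities taken as
    fractions of photos.
    """
    n = len(photo_tags)
    tag_counts = Counter()
    pair_counts = Counter()
    for tags in photo_tags:
        unique = sorted(set(tags))  # ignore duplicate tags on one photo
        tag_counts.update(unique)
        pair_counts.update(combinations(unique, 2))
    pmi = {
        (a, b): math.log((c / n) / ((tag_counts[a] / n) * (tag_counts[b] / n)))
        for (a, b), c in pair_counts.items()
    }
    return pair_counts, pmi


if __name__ == "__main__":
    # Toy photos, not data from the paper.
    photos = [{"beach", "sunset"}, {"beach", "sunset"}, {"beach", "surf"}, {"city", "night"}]
    counts, pmi = cooccurrence_and_pmi(photos)
    print(counts[("beach", "sunset")])  # 2
    print(round(pmi[("city", "night")], 4))  # 1.3863, i.e. log(4)
```

PMI rewards pairs that co-occur more often than their individual frequencies would predict, so rare but tightly linked tags (here "city" and "night") score higher than a frequent tag paired with a common partner.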


Acknowledgements:

We thank Prof. Andrew Hanson for discussions and advice on visualization, and the anonymous reviewers for their helpful comments. We also gratefully acknowledge the support of the following:

- Lilly Endowment
- IU Data to Insight Center