Kun Duan, Devi Parikh, David Crandall, and Kristen Grauman

NEW! Image features used to discover localized attributes are available!

We propose to model localized semantic visual attributes. Attributes are visual concepts that can be detected by machines, understood by humans, and shared across categories. They are particularly useful for fine-grained domains where categories are closely related to one another (e.g. bird species recognition). In such scenarios, relevant attributes are often local (e.g. “white belly”), but the question of how to choose these local attributes remains largely unexplored. In this project, we propose an interactive approach that discovers local attributes that are both discriminative and semantically meaningful from image datasets annotated only with fine-grained category labels and object bounding boxes. Our approach uses a latent conditional random field model to discover candidate attributes that are detectable and discriminative, and then employs a recommender system that selects attributes likely to be semantically meaningful. Human interaction is used to provide semantic names for the discovered attributes.

Figure 1. Sample local and semantically meaningful attributes automatically discovered by our approach. The names of the attributes are provided by the user-in-the-loop.

Approach

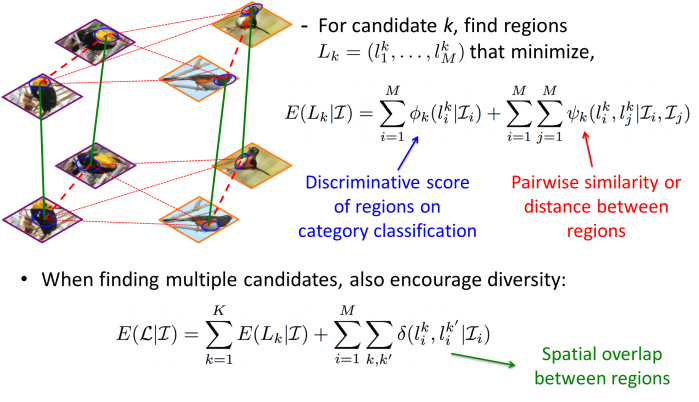

We search for an active split, defined as the two categories that are most similar under the attributes discovered so far. For each such active split, we model local attributes using a latent conditional random field.
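The active-split search can be illustrated with a minimal sketch. It assumes each category is summarized by a binary signature over the attributes discovered so far and that similarity is measured by Hamming distance; neither choice is stated on this page, and the names `find_active_split`, `hamming`, and the toy categories are hypothetical.

```python
from itertools import combinations


def hamming(a, b):
    """Count the positions where two equal-length signatures differ."""
    return sum(x != y for x, y in zip(a, b))


def find_active_split(signatures):
    """Return the pair of categories whose signatures are closest.

    `signatures` maps a category name to a binary attribute signature.
    Both the representation and the distance are assumptions made for
    this illustration, not the measure used in the project.
    """
    if len(signatures) < 2:
        raise ValueError("need at least two categories to form a split")
    pairs = combinations(sorted(signatures), 2)
    return min(pairs, key=lambda p: hamming(signatures[p[0]], signatures[p[1]]))


# Toy data: three categories described by three attributes found so far.
signatures = {
    "category_a": [1, 0, 1],
    "category_b": [1, 0, 0],
    "category_c": [0, 1, 0],
}
active_split = find_active_split(signatures)
print(active_split)
```

In this toy example the two categories that differ in only one attribute are returned, which is the pair that the current attributes separate least well and therefore the pair a new attribute should target.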

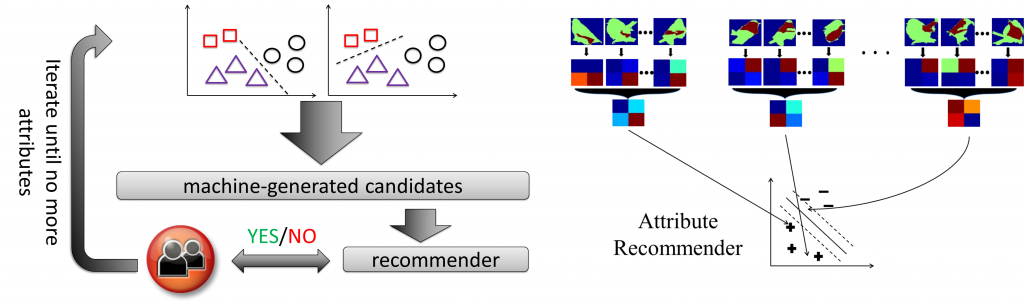

At each iteration, our approach automatically generates K candidates using the latent CRF model, and prioritizes those that are likely to be semantic via a recommender system. The candidates are then presented to human subjects, who either accept a candidate (i.e. judge it to be semantically meaningful) or reject it. The user feedback is used to update the recommender system.

Figure 2. Left: We iteratively discover local attributes from a given image dataset. Right: We employ a recommender system that measures the spatial consistency of each attribute candidate on the object.
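The iteration described above can be sketched as a simple loop. This is an illustration under stated assumptions, not the project's implementation: the latent CRF is replaced by a stub candidate generator, the recommender simply ranks by a precomputed consistency score and logs feedback, and each iteration is assumed to stop at the first accepted candidate. All names (`discover_attributes`, `ToyRecommender`, `toy_candidates`, `toy_user`) are hypothetical.

```python
def discover_attributes(generate_candidates, recommender, ask_user, k, iterations):
    """Run the generate / rank / ask / update loop and return accepted candidates."""
    accepted = []
    for step in range(iterations):
        # Stand-in for the latent CRF: produce K candidates for this iteration.
        candidates = generate_candidates(step, accepted, k)
        # Show the candidates the recommender considers most likely semantic first.
        ranked = sorted(candidates, key=recommender.score, reverse=True)
        for candidate in ranked:
            is_semantic = ask_user(candidate)
            recommender.update(candidate, is_semantic)
            if is_semantic:
                accepted.append(candidate)
                break  # assumption: move on after the first accepted candidate
    return accepted


class ToyRecommender:
    """Ranks by a given consistency score and only records the feedback.

    A real recommender would refit its model in `update`; this stub does not.
    """

    def __init__(self):
        self.feedback = []

    def score(self, candidate):
        return candidate["consistency"]

    def update(self, candidate, is_semantic):
        self.feedback.append((candidate["name"], is_semantic))


def toy_candidates(step, accepted, k):
    """Stub generator: K named candidates with increasing consistency scores."""
    return [
        {"name": f"step{step}_cand{i}", "consistency": (i + 1) / k}
        for i in range(k)
    ]


def toy_user(candidate):
    """Stub annotator: accepts only candidates whose name ends in 'cand2'."""
    return candidate["name"].endswith("cand2")


recommender = ToyRecommender()
found = discover_attributes(toy_candidates, recommender, toy_user, k=4, iterations=3)
print([c["name"] for c in found])
```

In each toy iteration the top-ranked candidate is rejected and the second one is accepted, so the recommender records both kinds of feedback before the loop moves on.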

Results

We demonstrate our method on two challenging fine-grained image datasets, Caltech-UCSD Birds-200-2011 (CUB) and Leeds Butterflies (LB).

Discovered Local Attributes.

Click here to see a gallery of sample discovered local attributes on the CUB dataset.

Click here to see a gallery of sample discovered local attributes on the LB dataset.

Image-to-text Generation.

Click here to see a gallery of sample image annotation results on the CUB dataset. Most of the images are from unseen bird categories.

Attribute-based Image Classification.

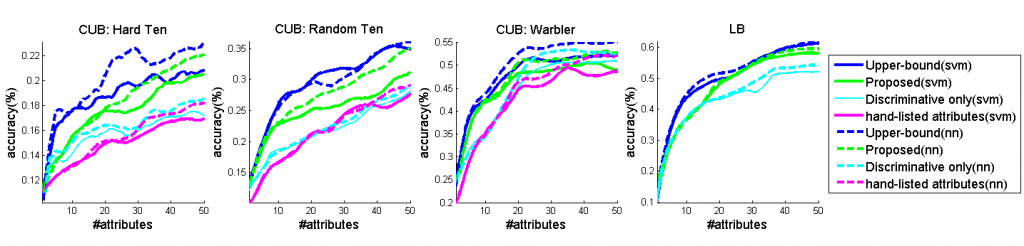

We find that our discovered attributes outperform those generated by traditional approaches.

Figure 3. Image classification performance on four datasets with SVM and nearest neighbor (nn) classifiers, and using four different attribute discovery strategies.

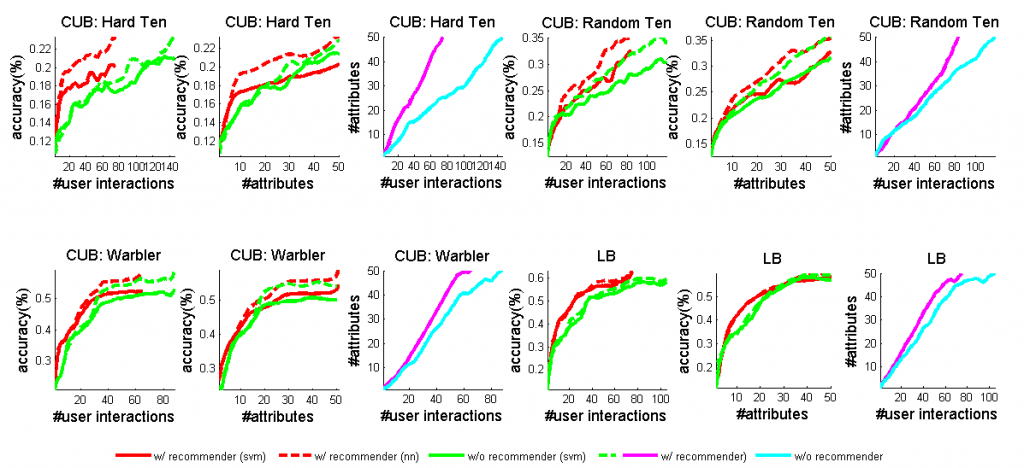

Figure 4. Classification performance of the Proposed system with and without using the recommender.


Download

NEW! We release the image features used to discover localized attributes on the Bird 200 dataset.

Read this and click here to download.

Acknowledgements

We gratefully acknowledge the support of the following:

National Science Foundation, Lilly Endowment, IU Data to Insight Center, Luce Foundation