Mingze Xu, Chenyou Fan, Yuchen Wang, Michael S. Ryoo, and David J. Crandall

Abstract

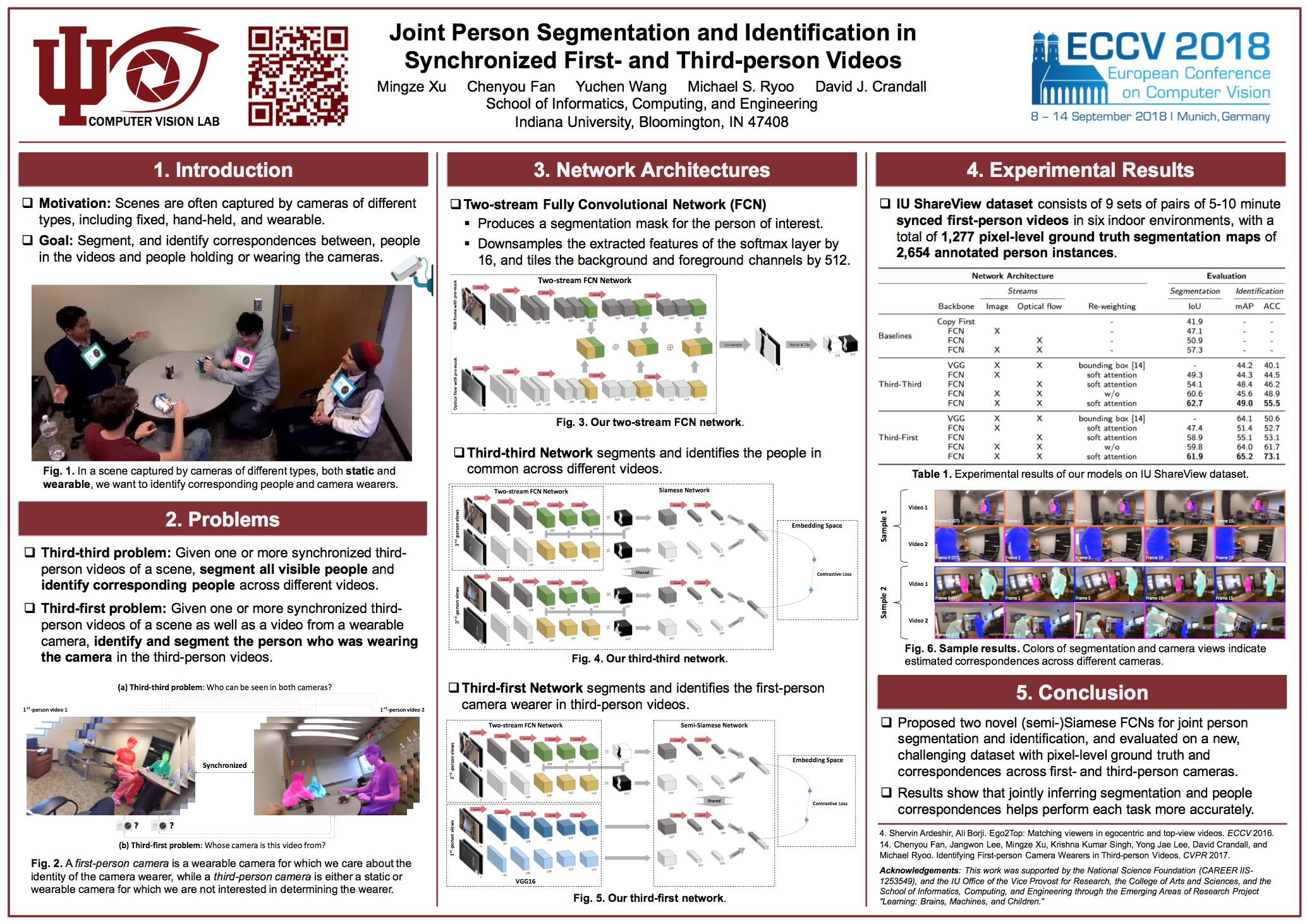

In a world of pervasive cameras, public spaces are often captured from multiple perspectives by cameras of different types, both fixed and mobile. An important problem is to organize these heterogeneous collections of videos by finding connections between them, such as identifying correspondences between the people appearing in the videos and the people holding or wearing the cameras. In this paper, we wish to solve two specific problems: (1) given two or more synchronized third-person videos of a scene, produce a pixel-level segmentation of each visible person and identify corresponding people across different views (i.e., determine who in camera A corresponds with whom in camera B), and (2) given one or more synchronized third-person videos as well as a first-person video taken by a mobile or wearable camera, segment and identify the camera wearer in the third-person videos. Unlike previous work, which requires ground truth bounding boxes to estimate the correspondences, we perform person segmentation and identification jointly. We find that solving these two problems simultaneously is mutually beneficial, because better fine-grained segmentation allows us to better perform matching across views, and information from multiple views helps us perform more accurate segmentation. We evaluate our approach on two challenging datasets of interacting people captured from multiple wearable cameras, and show that our proposed method performs significantly better than the state of the art on both person segmentation and identification.

For more details, please see our ECCV 2018 paper.

Poster & Demo


Downloads

August 2018 — PyTorch code for training and pre-trained models is now available!

August 2018 — IU ShareView dataset with pixel-level person annotations is now available!

The IU ShareView dataset consists of 9 sets of two first-person videos, each 5-10 minutes long. Each set contains 3-4 participants performing a variety of everyday activities (shaking hands, chatting, eating, etc.) in one of six indoor environments. Each person in each frame is annotated with a ground truth bounding box and a unique person ID. To evaluate our methods on person segmentation, we manually augmented a subset of the dataset with pixel-level person annotations, for a total of 1,277 labeled frames containing 2,654 annotated person instances.
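As a rough illustration of how per-frame annotations of this kind (a bounding box plus a person ID) might be held in memory, here is a minimal Python sketch. The class names, field names, camera labels, and box convention are all hypothetical and are not the released file format; the sketch also assumes that a person ID refers to the same individual in both videos of a set. It additionally works out the average number of annotated instances per labeled frame from the totals quoted above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PersonAnnotation:
    """One annotated person in one frame (hypothetical layout)."""
    person_id: int                  # assumed to be consistent across both videos of a set
    box: Tuple[int, int, int, int]  # assumed convention: (x_min, y_min, x_max, y_max) in pixels


@dataclass
class FrameAnnotation:
    """All annotated people in one frame of one video (hypothetical layout)."""
    set_id: int       # which of the 9 sets the frame belongs to
    camera: str       # hypothetical label for one of the two videos in the set
    frame_index: int
    people: List[PersonAnnotation]


def shared_person_ids(a: FrameAnnotation, b: FrameAnnotation) -> List[int]:
    """Return the IDs annotated in both frames, i.e. the cross-view correspondences."""
    ids_a = {p.person_id for p in a.people}
    ids_b = {p.person_id for p in b.people}
    return sorted(ids_a & ids_b)


# Two synchronized frames from the two videos of one set (made-up values).
frame_a = FrameAnnotation(
    set_id=1, camera="cam_a", frame_index=120,
    people=[PersonAnnotation(2, (40, 60, 180, 400)),
            PersonAnnotation(3, (300, 80, 420, 410))],
)
frame_b = FrameAnnotation(
    set_id=1, camera="cam_b", frame_index=120,
    people=[PersonAnnotation(1, (90, 50, 230, 390)),
            PersonAnnotation(2, (350, 70, 470, 400))],
)

matches = shared_person_ids(frame_a, frame_b)

# Totals quoted in the dataset description above.
labeled_frames = 1277
person_instances = 2654
avg_instances_per_frame = round(person_instances / labeled_frames, 2)
```

In this made-up pair of frames only person 2 appears in both views. The quoted totals work out to roughly two annotated people per labeled frame.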

Citation

@InProceedings{Xu_2018_ECCV,
  title = {Joint Person Segmentation and Identification in Synchronized First- and Third-person Videos},
  author = {Xu, Mingze and Fan, Chenyou and Wang, Yuchen and Ryoo, Michael S. and Crandall, David J.},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2018}
}

Acknowledgements

We would like to acknowledge the support of the following:

National Science Foundation